Large Language Models (LLMs) are revolutionizing the tech world—powering everything from chatbots and summarization tools to code assistants and customer support. As AI adoption grows, developers often find themselves juggling multiple LLMs across different providers to meet varied use cases.

But here’s the real challenge:

How can you deliver high-performance AI solutions while managing cost, latency, reliability, and privacy—without becoming a DevOps expert?

What is an LLM Router?

Think of an LLM router as a smart dispatcher that sits between your application and various AI model providers. It routes each request (a chat prompt, a summarization job, or a coding task) to the most appropriate model based on factors such as task type, cost, latency, and provider availability.

An LLM router helps you:

- Choose the best model for each task (e.g., summarization vs. code generation)

- Automatically switch providers in case of failures or high latency

- Balance speed, reliability, cost, and data privacy

- Avoid vendor lock-in and maximize model diversity

In simple terms, it hides the complexity of managing multiple APIs behind a single entry point, while letting you balance cost, speed, and output quality for each request.
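To make the dispatcher idea concrete, here is a minimal Python sketch of task-aware routing. It assumes a hypothetical catalogue of models with made-up names, prices, and latencies, and it picks the suitable model with the best weighted cost/latency score. Production routers typically rely on live metrics and richer quality signals than this.

```python
from dataclasses import dataclass

# Hypothetical model catalogue: names, prices, latencies, and task tags are
# illustrative placeholders, not real provider data.
@dataclass(frozen=True)
class ModelOption:
    name: str
    provider: str
    cost_per_1k_tokens: float  # USD, assumed
    avg_latency_ms: float      # assumed rolling average
    tasks: frozenset[str]      # tasks this model is considered good at

CATALOGUE = [
    ModelOption("fast-small", "provider-a", 0.0005, 300, frozenset({"chat", "summarization"})),
    ModelOption("code-pro", "provider-b", 0.0030, 900, frozenset({"code"})),
    ModelOption("general-large", "provider-c", 0.0100, 1500, frozenset({"chat", "code", "summarization"})),
]

def route(task: str, cost_weight: float = 0.5, latency_weight: float = 0.5) -> ModelOption:
    """Pick the model suited to `task` with the lowest weighted cost/latency score."""
    candidates = [m for m in CATALOGUE if task in m.tasks]
    if not candidates:
        raise ValueError(f"no model registered for task {task!r}")
    # Normalize each metric by the largest value among candidates so both lie in (0, 1].
    max_cost = max(m.cost_per_1k_tokens for m in candidates)
    max_latency = max(m.avg_latency_ms for m in candidates)

    def score(m: ModelOption) -> float:
        return (cost_weight * m.cost_per_1k_tokens / max_cost
                + latency_weight * m.avg_latency_ms / max_latency)

    return min(candidates, key=score)
```

For example, `route("code")` returns the `code-pro` entry, while `route("chat")` returns `fast-small`. Shifting `cost_weight` and `latency_weight` changes which trade-off the router favors.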

Why Use an LLM Router?

Without a router, relying on a single provider leaves you vulnerable to:

- Vendor Lock-in: Sudden pricing changes or outages can disrupt your service

- Limited Cost Flexibility: You may overpay for features available cheaper elsewhere

- Task Mismatch: No single model is best at everything; some are better suited to specific tasks such as coding, chatting, or summarization

- Privacy Concerns: Especially in the EU, using non-GDPR-compliant providers risks legal issues

- Reduced Flexibility: You miss out on the rapidly evolving LLM ecosystem

Benefits of using an LLM Router:

- Smart routing across providers

- Automatic failover and redundancy

- Real-time optimization for cost and performance

- Support for compliance and data governance

- Task-specific routing for better results
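Automatic failover, one of the benefits above, can be sketched as trying providers in priority order until one succeeds. The provider clients and the `ProviderError` exception below are hypothetical stand-ins for real SDK calls; a production router would also add timeouts, retries with backoff, and health checks.

```python
from typing import Callable, Sequence

class ProviderError(Exception):
    """Raised by a (hypothetical) provider client when a call fails or times out."""

def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each provider in priority order; return (provider_name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed; " + "; ".join(errors))

# Stand-in clients used only for illustration.
def flaky_provider(prompt: str) -> str:
    raise ProviderError("timeout")

def backup_provider(prompt: str) -> str:
    return f"echo: {prompt}"
```

Calling `complete_with_failover("hello", [("primary", flaky_provider), ("backup", backup_provider)])` returns `("backup", "echo: hello")`, because the primary raises and the router falls through to the next entry.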

Comparing LLM Routers: Smarter AI Model Routing for Better Performance & Compliance

Let’s look at some of the most popular LLM routers in the market and how they stack up:

1. Cortecs

Pros:

- Fully GDPR-compliant

- Strong support for European LLM providers

- Automatic failover for high availability

Cons:

- Primarily focused on the EU market

- May lack global provider diversity

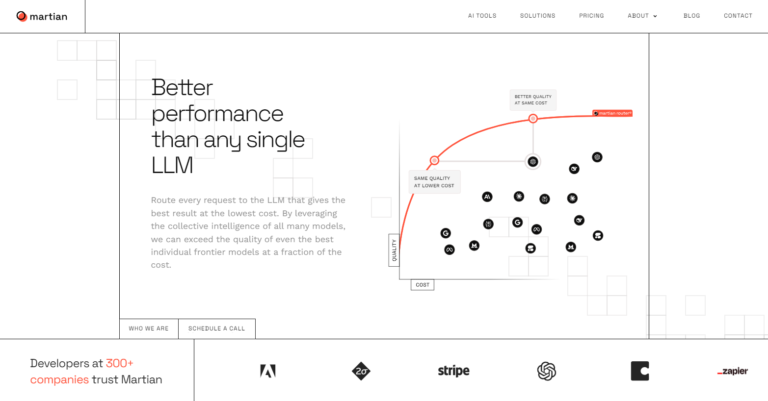
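Routers that emphasize GDPR compliance usually restrict which providers a request may reach. The Python sketch below shows one simple way to express such a policy; the provider names and the `region` and `gdpr_dpa_signed` metadata fields are hypothetical, and this is not how Cortecs or any other product is implemented. Data residency and a signed data processing agreement are only part of GDPR compliance, so a filter like this supports compliance but does not guarantee it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Provider:
    name: str
    region: str            # where requests are processed, e.g. "EU" or "US" (assumed metadata)
    gdpr_dpa_signed: bool  # whether a data processing agreement is in place (assumed metadata)

# Hypothetical provider registry for illustration only.
PROVIDERS = [
    Provider("eu-host-1", "EU", True),
    Provider("us-host-1", "US", False),
    Provider("eu-host-2", "EU", False),
]

def eligible_providers(require_eu: bool) -> list[Provider]:
    """Return the providers a request may use under a simple EU-residency policy."""
    if not require_eu:
        return list(PROVIDERS)
    # Keep only providers that process data in the EU and have a DPA in place.
    return [p for p in PROVIDERS if p.region == "EU" and p.gdpr_dpa_signed]
```

Here `eligible_providers(require_eu=True)` returns only `eu-host-1`, and that shortlist can then be handed to whatever logic picks the final model.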

2. Withmartian

Pros:

- Dynamic routing to the best-performing model per query

- Excellent cost efficiency

- Reported to outperform even GPT-4 in some tests

Cons:

- Complex pricing for advanced usage

- Not suitable for European users (GDPR non-compliant)

3. Requesty

Pros:

- Unified API for many providers

- Transparent cost tracking and usage metrics

- Smart routing for cost optimization

Cons:

- Initial setup can be complex

- Adds some latency because of its internal request-classification step

- Not GDPR-compliant for EU use

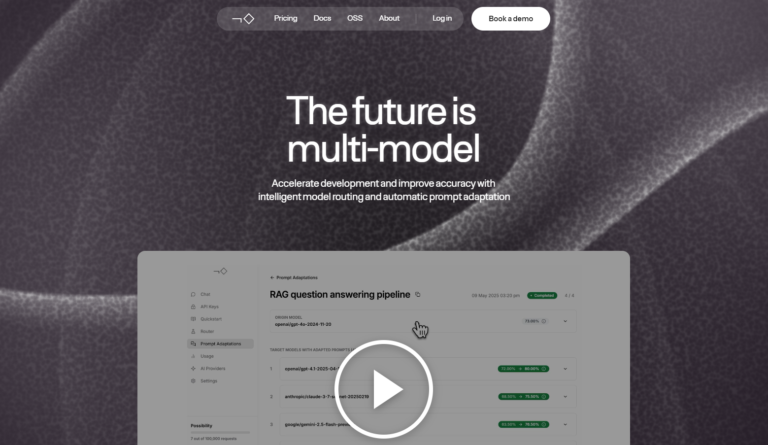
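Cost tracking of the kind listed among Requesty's pros comes down to multiplying token counts by per-token prices and keeping running totals. The following Python sketch assumes hypothetical model names and per-1,000-token prices in USD; it is not Requesty's API, and in practice prices would come from each provider's price list and token counts typically from the usage data in each response.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical per-1,000-token prices in USD; real prices vary by provider and model.
PRICES = {
    "model-a": {"input": 0.0005, "output": 0.0015},
    "model-b": {"input": 0.0030, "output": 0.0060},
}

@dataclass
class UsageTracker:
    # Running totals keyed by model name.
    spend: dict[str, float] = field(default_factory=lambda: defaultdict(float))
    requests: dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Add one request's cost to the running totals and return that cost."""
        price = PRICES[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.spend[model] += cost
        self.requests[model] += 1
        return cost
```

For instance, `UsageTracker().record("model-a", 1000, 2000)` returns about 0.0035 (USD) and adds it to the `model-a` total, which is the kind of per-model figure a usage dashboard would display.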

4. NotDiamond

Pros:

- Uses a machine learning model (Random Forest) for intelligent routing

- Lets you tune the cost vs. performance trade-off via threshold settings

- Supports custom router training for tailored use cases

Cons:

- Training custom routers adds technical complexity

- Sparse documentation on pricing

- Not usable in GDPR-regulated regions

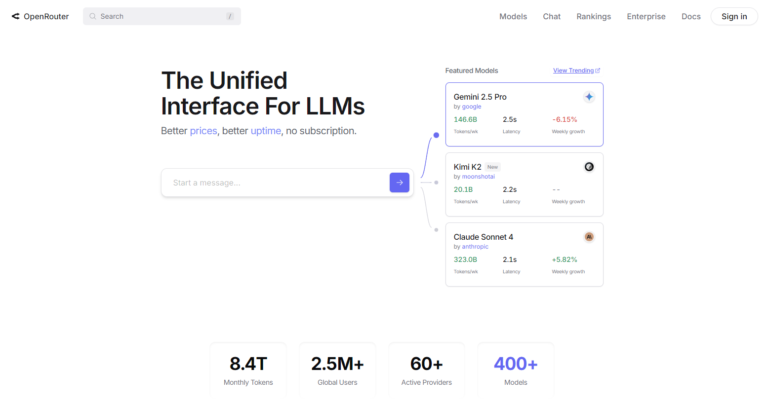
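NotDiamond's threshold setting reflects a general pattern: estimate whether a cheaper model is likely to answer well, and escalate to a stronger model only when that estimate falls below a threshold. The Python sketch below illustrates the pattern only. Its keyword-and-length heuristic is a hypothetical stand-in for a trained classifier (the Random Forest mentioned above), and the model names are placeholders, not NotDiamond's API.

```python
def predict_cheap_model_success(prompt: str) -> float:
    """Hypothetical stand-in for a learned router: estimated probability that the
    cheap model answers `prompt` well. A real router would use a trained classifier."""
    hard_markers = ("prove", "refactor", "multi-step", "optimize")
    hits = sum(marker in prompt.lower() for marker in hard_markers)
    # 'Hard' keywords and longer prompts lower the estimate; clamp the result to [0, 1].
    estimate = 0.9 - 0.25 * hits - min(len(prompt), 2000) / 5000
    return max(0.0, min(1.0, estimate))

def choose_model(prompt: str, threshold: float) -> str:
    """Route to the cheap model only when its predicted success clears `threshold`."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    p = predict_cheap_model_success(prompt)
    return "cheap-model" if p >= threshold else "strong-model"
```

Raising `threshold` sends more prompts to `strong-model` (higher cost, potentially higher quality), while lowering it favors `cheap-model`. With a threshold of 0.5, `"Summarize this paragraph."` goes to `cheap-model` and `"Refactor and optimize this module"` goes to `strong-model`.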

5. OpenRouter

Pros:

- Access multiple models via a single API

- Broad model support from top providers

- High availability with built-in fallback options

Cons:

- Raises some data privacy concerns

- Lacks GDPR compliance for European deployments

Final Thoughts

If you’re building AI-powered applications at scale, LLM routers are essential. They help you:

- Cut costs by routing each request to the most affordable model that can handle it

- Improve reliability through failovers

- Stay compliant with data privacy regulations

- Tap into the strengths of various models across providers

Whether you’re optimizing for price, speed, or security, an LLM router gives you the flexibility and control needed to succeed in today’s AI-driven world.